Ever since the presidential election, everyone I know seems to be worrying about their social media echo chamber. And no one seems to see the irony of discussing it on Facebook.

Many of us seem to feel trapped in a filter bubble created by the personalization algorithms owned by Facebook, Twitter, and Google. Echo chambers are obviously problematic; social discourse suffers when people have a narrow information base with little in common with one another.

The Internet was supposed to fix that by democratizing information and creating a global village. Indeed, the democratization has occurred. Social newsfeeds on Facebook and Twitter and algorithms at Google perform the curation function that was previously performed by news editors. By some estimates, over 60 percent of millennials use Facebook as their primary source for news. But, in recent weeks, many commentators have voiced concerns that these trends, instead of creating a global village, have further fragmented us and are destroying democracy. Mark Zuckerberg, Facebook’s CEO, believes we are overestimating the impact of the social network on our democracy.

My collaborators and I have studied echo chambers for some time now. And I know Mark Zuckerberg is wrong. Facebook can do more to help us break free from the filter bubble. But we are not helpless within it. We can easily break free, but we choose not to.

In studies in 2010 and 2014, my research group at Wharton evaluated the media consumption patterns of more than 1,700 iTunes users who were shown personalized content recommendations. The analysis measured the overlap in the media users consumed, that is, the extent to which two randomly selected users consumed media in common. If users were fragmenting because of the personalized recommendations, the overlap in consumption across users would decrease after they started receiving recommendations.

After recommendations were turned on, the overlap in media consumption increased for all users. This increase occurred for two reasons. First, users simply consumed more media when an algorithm found relevant media for them. If two users each consume twice as much media, the chance that they consume some of the same content also rises. Second, algorithmic recommendations helped users explore and branch into new interests, thereby increasing overlap with others. In short, we didn’t find evidence for an echo chamber.
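
To make the first mechanism concrete, here is a minimal Python sketch. It is not the overlap metric used in the Wharton studies, which the article does not spell out; it assumes a deliberately simplified model in which two users each pick k distinct items uniformly at random, and independently, from a catalog of N items. Under that assumption the expected number of shared items is k²/N, so doubling how much each user consumes roughly quadruples the expected overlap. All function names here are hypothetical, chosen for illustration.

```python
from itertools import combinations
from math import comb


def expected_shared_items(catalog_size: int, k: int) -> float:
    """Expected number of items two users share, ASSUMING each independently
    picks k distinct items uniformly at random from the catalog."""
    # Each of user A's k items lands in user B's k-item set with probability k / N.
    return k * k / catalog_size


def prob_any_shared(catalog_size: int, k: int) -> float:
    """Probability that the two users share at least one item (same assumptions)."""
    # Complement: all of B's k items come from the N - k items A did not pick.
    # math.comb returns 0 when k > N - k, so the probability is then 1.
    return 1 - comb(catalog_size - k, k) / comb(catalog_size, k)


def mean_pairwise_overlap(consumption: dict[str, set[str]]) -> float:
    """Average number of items shared by a pair of users, over all pairs.
    `consumption` maps a hypothetical user ID to the set of items consumed."""
    pairs = list(combinations(consumption.values(), 2))
    if not pairs:
        return 0.0
    return sum(len(a & b) for a, b in pairs) / len(pairs)


if __name__ == "__main__":
    # Compare light vs. heavy consumption in a 1,000-item catalog.
    for k in (10, 20):
        print(k, expected_shared_items(1000, k), round(prob_any_shared(1000, k), 3))
```

In a 1,000-item catalog, `expected_shared_items(1000, 10)` gives 0.1 shared items per pair, while `expected_shared_items(1000, 20)` gives 0.4. The model ignores the second mechanism, exploration of new interests, which the study credits with raising overlap as well.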

But political content is different from other forms of media. For example, people are less likely to hold extreme views or polarize over, say, music than over political ideology. Further, social newsfeeds are different from the personalized recommendations one might see on iTunes. So the question is whether our results generalize to social media as well.

The answer emerges from a 2015 study by researchers at Facebook who analyzed how the social network influences our exposure to diverse perspectives. The researchers evaluated the newsfeeds of 10.1 million active Facebook users in the US who self-reported their political ideology (“conservative,” “moderate,” or “liberal”). They then calculated what proportion of the news stories in these users’ newsfeeds was cross-cutting, defined as sharing a perspective other than the user’s own (for example, a liberal reading a news story with a primarily conservative perspective).

On a social network like Facebook, three factors influence the extent to which we see cross-cutting news: first, who our friends are and what news stories they share; second, which of the stories shared by friends the newsfeed algorithm displays; and third, which of the displayed stories we actually click on. If the second factor is the primary driver of the echo chamber, then Facebook deserves all the blame. Conversely, if the first or third factor is responsible, then we have created our own echo chambers.

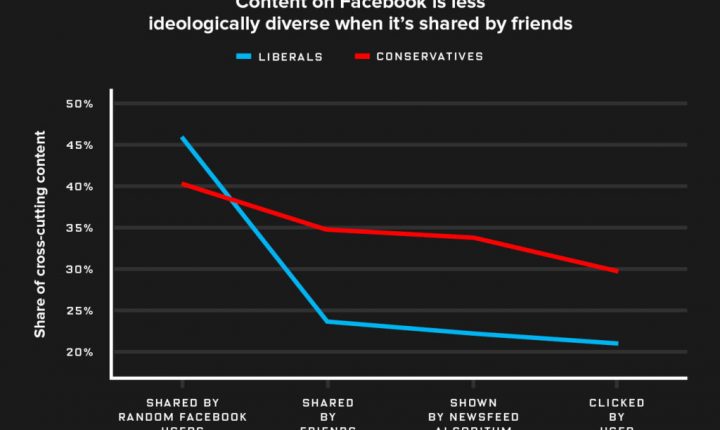

If we acquired our news media from a randomly selected group of Facebook users, nearly 45 percent of news seen by liberals and 40 percent seen by conservatives on Facebook would be cross-cutting. But we acquire these news stories from our friends. As a result, the researchers found that only 24 percent of news stories shared by liberals’ friends were cross-cutting and about 35 percent of stories shared by conservatives’ friends were cross-cutting. Clearly, the like-mindedness of our Facebook friends traps us in an echo chamber.

The newsfeed algorithm then selects which of your friends’ news stories to show you, based on your prior interactions with friends. Because we tend to engage more with like-minded friends and ideologically similar websites, the algorithm further reduces the proportion of cross-cutting news stories to 22 percent for liberals and 34 percent for conservatives (see figure above). Facebook’s algorithm worsens the echo chamber, but not by much.

Finally, there is the question of which of these news stories we click on. The researchers found that the final proportion of cross-cutting news stories we click on is 21 percent for liberals and 30 percent for conservatives. We clearly prefer news stories that are likely to reinforce our existing views rather than challenge them.

Source: “Exposure to ideologically diverse news and opinion on Facebook,” Science, 5 June 2015. Credit: WIRED
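
The three-step funnel can be tallied in a few lines of Python. This sketch uses only the approximate percentages quoted in the article (45, 24, 22, 21 for liberals; 40, 35, 34, 30 for conservatives) and simply attributes each percentage-point drop to the step that produced it: friends’ sharing, the newsfeed algorithm, or our own clicks. It is back-of-the-envelope arithmetic, not the statistical analysis the Facebook researchers performed, and the stage labels are illustrative.

```python
# Percent of news that is cross-cutting at each stage, as quoted in the article.
STAGES = ["random baseline", "shared by friends", "shown by algorithm", "clicked"]
CROSS_CUTTING = {
    "liberal": [45, 24, 22, 21],
    "conservative": [40, 35, 34, 30],
}


def stage_drops(shares: list[float]) -> list[float]:
    """Percentage-point drop between each consecutive pair of stages."""
    return [prev - cur for prev, cur in zip(shares, shares[1:])]


def drop_attribution(shares: list[float]) -> dict[str, float]:
    """Fraction of the total drop (baseline to clicked) attributed to each step."""
    total = shares[0] - shares[-1]
    return {stage: drop / total for stage, drop in zip(STAGES[1:], stage_drops(shares))}


if __name__ == "__main__":
    for group, shares in CROSS_CUTTING.items():
        for stage, frac in drop_attribution(shares).items():
            print(f"{group:<13}{stage:<20}{frac:6.1%}")
```

By this rough accounting, friend selection explains 21 of the 24 points lost for liberals (87.5 percent) and the algorithm only 2 (about 8 percent). For conservatives, the algorithm accounts for 1 of 10 points, while friends account for 5 and clicks for 4.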

The authors conclude that the primary driver of the digital echo chamber is the actions of users (who we connect with online and which stories we click on) rather than the choices the newsfeed algorithm makes on our behalf.

Should we believe a research study conducted by Facebook researchers that absolves the company’s algorithms and places the blame squarely on us? I think the study is well designed. Further, even though the studies I describe were conducted in 2015 or earlier, the underlying mechanisms they investigated (the design of personalization algorithms and our preference for like-mindedness) have not fundamentally changed. So I am confident that all three studies would yield qualitatively similar findings if repeated now, in November 2016. That said, I disagree with a key conclusion of the Facebook study. It is true that our friendship circles are often not diverse enough, but Facebook could easily recommend cross-cutting articles from elsewhere in its network (e.g., “what else are Facebook users reading?”). That the news shown in our feeds comes only from our friends is ultimately a constraint that Facebook itself imposes.

That doesn’t mean you and I are acquitted. The primary issue is that we deliberately choose actions that push us into an echo chamber. First, we connect only with like-minded people and “unfriend” anyone whose viewpoints we disagree with, creating insular worlds. Second, even when the newsfeed algorithm shows cross-cutting content, we do not click on it. Why should Facebook be obligated to show content with which we don’t engage? Finally, Facebook has a business to run. If I appear to be satisfied seeing news on Facebook from sources I like, how is the echo chamber Facebook’s responsibility? Why should Facebook not optimize for its business if we are unwilling to vote with our clicks?

In The Big Sort, Bill Bishop shows how, over the last 30 years, Americans have sorted themselves into like-minded neighborhoods. The same appears to be happening on the web. All the empirical research to date suggests that the cause is not personalization algorithms per se; algorithms can easily expose us to diverse perspectives. The cause is the data fed to those algorithms and the actions we take as end users. Technology companies like Facebook and Google should do more. But so should you and I.
